Recycle Classifier

Project type: Individual Project

Timeline: 9 weeks

Tool: Teachable Machine

Language: JavaScript (p5.js and ml5.js)

Background and Goal

From groceries to pizza, delivery apps and services have made my life easier than ever before. I often find myself choosing convenience over traditional shopping methods, which has significantly increased the amount of packaging waste I need to sort. Determining whether containers from supermarkets or restaurants are recyclable can be tedious and unclear. While recycling has always been important, the surge in waste during the pandemic highlighted the need for a better solution. This inspired me to explore how machine learning could help distinguish recyclable materials from non-recyclable ones.

For this project, I used Google’s Teachable Machine, a user-friendly, web-based machine learning platform, to train a custom image classification model on a dataset of over 500 images. I also followed The Coding Train tutorials to learn JavaScript, p5.js, and ml5.js, which I used to build a demo website showing the model in action.

Image Classification

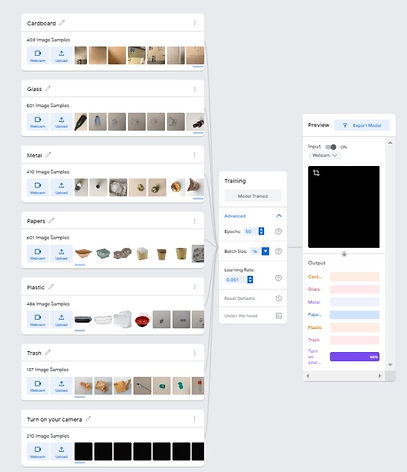

Imagine you are standing in front of a garbage bin, trying to figure out whether the item in your hand is recyclable. This is even more frustrating when the material is recyclable but carries no recycling symbol or logo. Image classification can make that decision easier. With Google’s Teachable Machine, I trained a model on over 100 pictures in each of 6 categories of objects: Cardboard, Glass, Metal, Papers, Plastic, and Trash. To keep the model from identifying a black screen as an object, I added a 7th category, "Turn on your camera", which contains only black-screen images.

Teachable Machine Training

I wanted to improve how well my model could recognize "Trash" items, since this category was the hardest to predict accurately. Trash items can often look similar to other categories like plastic or paper, so the model needed extra help learning to tell them apart.

To train the model, I used Teachable Machine, which lets you adjust settings to improve how your model learns.

There are three settings you can change:

- Epochs: how many times the model looks at your entire dataset during training.

- Batch Size: how many samples it looks at in one go.

- Learning Rate: how quickly the model tries to learn patterns.

At first, I used the default settings: 50 Epochs and a Batch Size of 16. The results weren’t accurate enough, so I started experimenting. I decided to adjust only the Epochs and Batch Size and to leave the Learning Rate alone, because changing it can affect results in unpredictable ways, especially with larger datasets.

To test what worked best, I trained the model 20 times with different combinations. Each time, I checked the "Loss per Epoch" graph, which shows how much the model is still struggling to make correct predictions. A lower loss means better performance. I noticed that past a certain point, increasing the Epochs no longer made the model better: the loss curve flattened out.
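The flattening of the loss curve can also be checked numerically rather than by eye. The sketch below is only an illustration: `findPlateauEpoch`, `minDelta`, and `patience` are hypothetical names rather than Teachable Machine settings, and the loss values are made up, not measurements from my training runs.

```javascript
// Return the last epoch that still improved the loss in a meaningful way,
// or null if the loss was still improving when training ended.
// losses[i] is the loss after epoch i + 1.
// minDelta and patience are hypothetical knobs chosen for this example.
function findPlateauEpoch(losses, minDelta = 0.01, patience = 3) {
  let best = Infinity;
  let bestEpoch = 0;
  for (let i = 0; i < losses.length; i++) {
    if (best - losses[i] > minDelta) {
      // Meaningful improvement: remember this epoch.
      best = losses[i];
      bestEpoch = i + 1;
    } else if (i + 1 - bestEpoch >= patience) {
      // No meaningful improvement for `patience` epochs in a row.
      return bestEpoch;
    }
  }
  return null;
}

// Made-up loss values for illustration only.
const exampleLosses = [0.9, 0.6, 0.4, 0.3, 0.25, 0.249, 0.251, 0.248, 0.25];
console.log(findPlateauEpoch(exampleLosses)); // 5
```

In this example the loss stops improving after epoch 5, so any further epochs would add training time without a visible benefit.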

Interestingly, even when I used the same settings, I got slightly different results each time. But eventually, I found that using 65 Epochs and a Batch Size of 512 gave me the most accurate predictions for the Trash category.
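To get a feel for how different these two configurations are, it helps to count how often the model's weights are updated. The sketch below assumes that roughly 500 images reach the training loop; the real number depends on the dataset and on how many images the tool holds back for testing. `countUpdates` is a hypothetical helper written for this example.

```javascript
// One weight update happens per batch, so:
// updates per epoch = ceil(training samples / batch size).
function countUpdates(trainingSamples, epochs, batchSize) {
  const updatesPerEpoch = Math.ceil(trainingSamples / batchSize);
  return { updatesPerEpoch, totalUpdates: updatesPerEpoch * epochs };
}

// Assumption: about 500 training images (not an exact figure from the project).
const assumedTrainingSamples = 500;

const defaultRun = countUpdates(assumedTrainingSamples, 50, 16);
const tunedRun = countUpdates(assumedTrainingSamples, 65, 512);

console.log(defaultRun); // 32 updates per epoch, 1600 in total
console.log(tunedRun);   // 1 update per epoch, 65 in total
```

Under this assumption, a Batch Size of 512 is larger than the whole training set, so each epoch is a single update computed from every image at once. The default settings make many more, smaller updates, each based on only 16 images.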

Customized Teachable Machine Interface

After training the model, I exported it and downloaded it to my computer. My initial goal was to build a custom dashboard that used the model to classify each object I threw out and showed how many objects of each type I had discarded. I watched online tutorials such as The Coding Train to understand how p5.js works and to learn about visualization. However, because I was unfamiliar with JavaScript, I had to scale back my expectations, and the dashboard became a long-term project rather than a short-term goal.
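A minimal sketch of how an exported model can be loaded and run on a webcam feed is shown below. It follows the pattern from The Coding Train tutorials and is not the exact code of my demo. It assumes that p5.js and an ml5.js 0.x release (with the error-first callback) are loaded by the page and that the exported files sit in a hypothetical `./model/` folder. The `tallyLabel` helper and the key-press trigger are also hypothetical; they only hint at how the planned dashboard could count discarded objects.

```javascript
// Assumptions: p5.js and ml5.js (0.x API) are loaded by the page, and the
// exported Teachable Machine files sit in a hypothetical ./model/ folder.
const MODEL_URL = "./model/model.json";

let classifier;
let video;
let currentLabel = "Loading...";
const counts = {}; // running tally for the planned dashboard

// Pure helper: add one sighting of `label` to the tally and return the tally.
function tallyLabel(tally, label) {
  tally[label] = (tally[label] || 0) + 1;
  return tally;
}

function preload() {
  classifier = ml5.imageClassifier(MODEL_URL);
}

function setup() {
  createCanvas(640, 520);
  video = createCapture(VIDEO);
  video.size(640, 480);
  video.hide(); // draw the feed on the canvas instead of as a separate element
  classifyVideo();
}

function classifyVideo() {
  classifier.classify(video, gotResults);
}

// ml5 0.x passes (error, results); results are sorted by confidence.
function gotResults(error, results) {
  if (error) {
    console.error(error);
    return;
  }
  currentLabel = results[0].label;
  classifyVideo(); // keep classifying the live feed
}

function draw() {
  background(0);
  image(video, 0, 0);
  fill(255);
  textSize(24);
  textAlign(CENTER, CENTER);
  text(currentLabel, width / 2, height - 20);
}

// Hypothetical "I threw this out" action: press any key to record the
// label currently shown on screen.
function keyPressed() {
  tallyLabel(counts, currentLabel);
  console.log(counts);
}
```

Keeping the tally in a plain object makes it easy to feed into a chart later, which is the part of the dashboard that remains future work.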

Demonstration

For the demonstration, I recorded a video to show how I tested the trained model and what the results looked like. At first, I had some difficulties with lighting and camera distance, which affected the model’s ability to recognize objects. To improve accuracy, I added a dark background behind the items to help the camera focus and reduce distractions.

Findings

- Unlike training the model, real-time image classification is not easy. The results varied with the surrounding environment, such as lighting and motion, and with the shapes and materials of the objects.

- The Trash category was tricky because a trash item could be classified as one of the other categories depending on how its image was captured.

- The model misclassified objects that were a little too far from the camera. I set up a non-white background because the classifier often detected a white background as paper.
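One possible way to make live results less jumpy, which I did not implement in this project, is to ignore low-confidence predictions and take a majority vote over the most recent frames. The sketch below assumes each prediction comes with a label and a confidence between 0 and 1, as ml5.js results do. `createSmoother`, `windowSize`, and `minConfidence` are hypothetical names and values.

```javascript
// Keep the last `windowSize` confident predictions and report the most
// common label among them. Both parameters are hypothetical defaults.
function createSmoother(windowSize = 15, minConfidence = 0.6) {
  const recent = [];
  return function update(label, confidence) {
    // Only confident predictions get a vote.
    if (confidence >= minConfidence) {
      recent.push(label);
      if (recent.length > windowSize) recent.shift();
    }
    // Count votes; on a tie, the label that reached the count first wins.
    const votes = {};
    let winner = null;
    for (const seen of recent) {
      votes[seen] = (votes[seen] || 0) + 1;
      if (winner === null || votes[seen] > votes[winner]) winner = seen;
    }
    return winner; // null until at least one confident prediction arrives
  };
}

// Usage: feed in one prediction per frame and display the returned label.
const smooth = createSmoother(3, 0.6);
smooth("Papers", 0.9);
smooth("Trash", 0.4); // ignored: below the confidence threshold
console.log(smooth("Plastic", 0.8));
```

A single odd frame caused by motion or a change in lighting then no longer flips the displayed label, at the cost of a short delay before a real change shows up.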

Source

- Rosenbaum, E. (2021, May 22). Is recycling a waste? Here’s the answer from a plastics expert before you ditch the effort. CNBC. Retrieved May 28, 2021, from https://www.cnbc.com/2021/05/22/is-recycling-a-waste-heres-the-answer-from-a-plastics-expert.html

- Sharp, S. R. (2021, June 8). New Machine Learning Can Match Ancient Pottery Fragments. Hyperallergic. Retrieved June 8, 2021, from https://hyperallergic.com/651726/machine-learning-matches-ancient-pottery-fragments-tusayan-white-ware/

- Teachable Machine FAQ. Teachable Machine. (n.d.). Retrieved from https://teachablemachine.withgoogle.com/faq